ComfyUI Integrates OpenAI's Latest Image Generation Model: GPT-Image-1

AGI (All Ghibli Images) is coming to a ComfyUI instance near you!

OpenAI announced the GPT-Image-1 API (the same model behind image generation in ChatGPT with GPT-4o) just 10 minutes ago. We’re excited to share that ComfyUI now supports it through our native API Nodes (beta), so you can access state-of-the-art image generation directly inside your node graph without setting up separate API keys.

This feature is now in beta, and we’re opening it up for early experimentation.

GPT-Image-1 Highlights:

OpenAI’s multimodal flagship, the same model behind GPT-4o image generation:

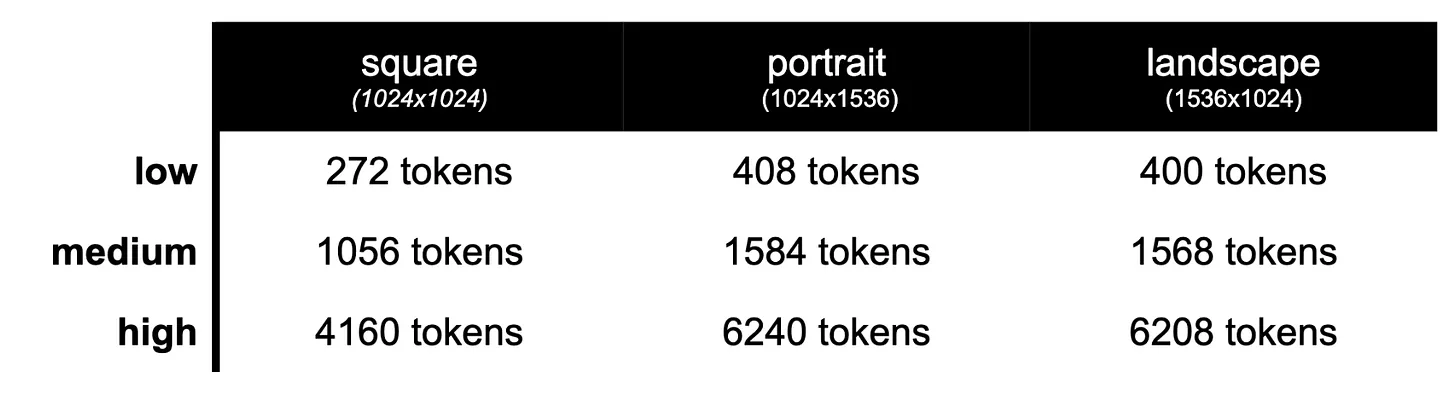

Supports three image sizes, Square (1024×1024), Portrait (1024×1536), and Landscape (1536×1024), plus Auto

Three quality levels: low, medium, high

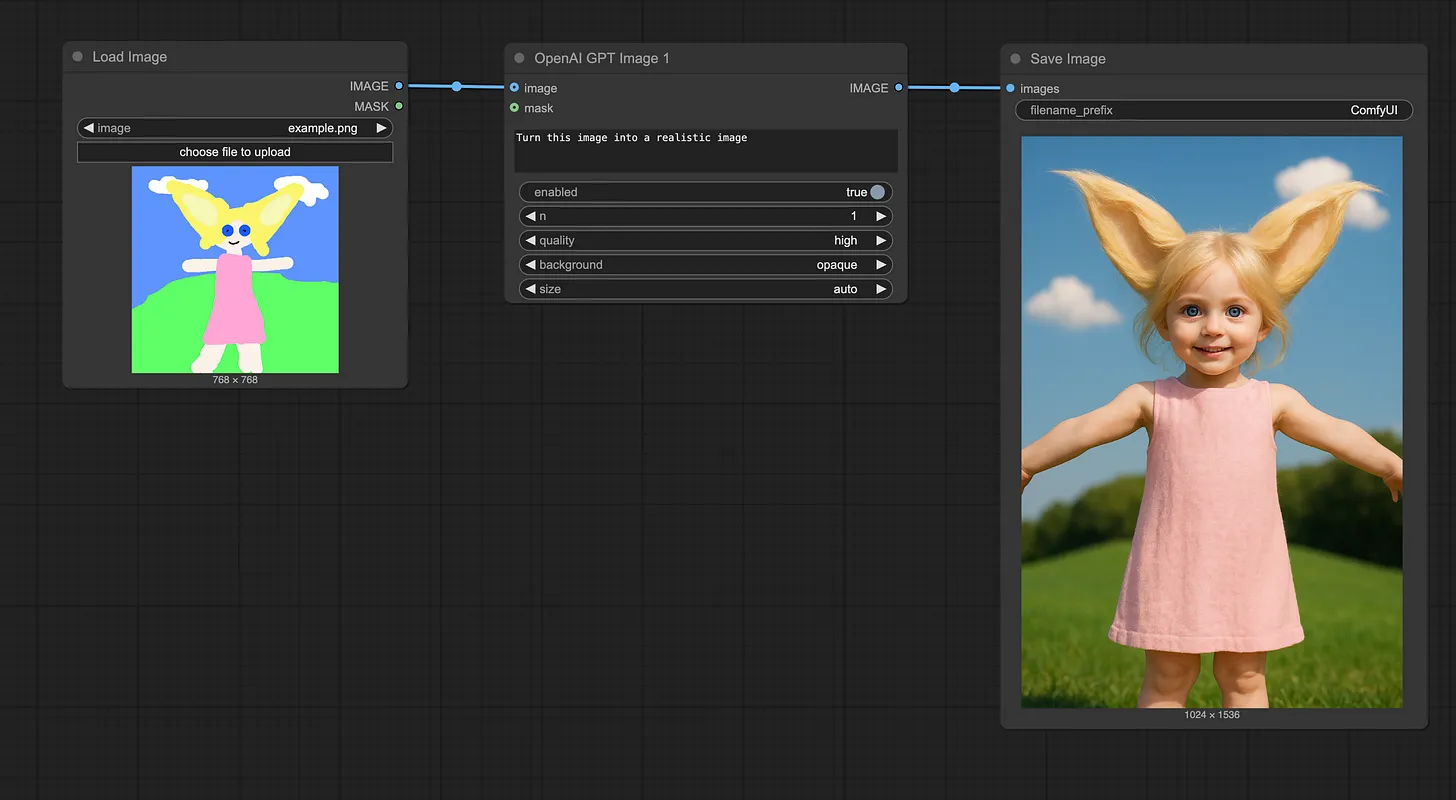

Supports transparent backgrounds and mask-based image editing in ComfyUI

To try out this integration, you’ll need to register for or log in to a Comfy Org account. This is required because the model runs through OpenAI’s paid API service. The API nodes are optional and are designed for accessing external models.

ComfyUI will always remain fully open source and free for local users.

Get Started

Update ComfyUI or ComfyUI Desktop to the latest version.

Log In: To use the API nodes, you need to be logged in. Go to Settings → User → Login. Don’t have an account? Hit Create New Account.

Top Up Credits: Settings → Credits → Buy Credits. We use a prepaid model: credits are deducted only for the runs you make, so there are no surprise charges.

Add the “OpenAI GPT Image 1” node to your canvas and run!

Image input and mask editing are also enabled by adding a “Load Image” node.

Workflow Showcases

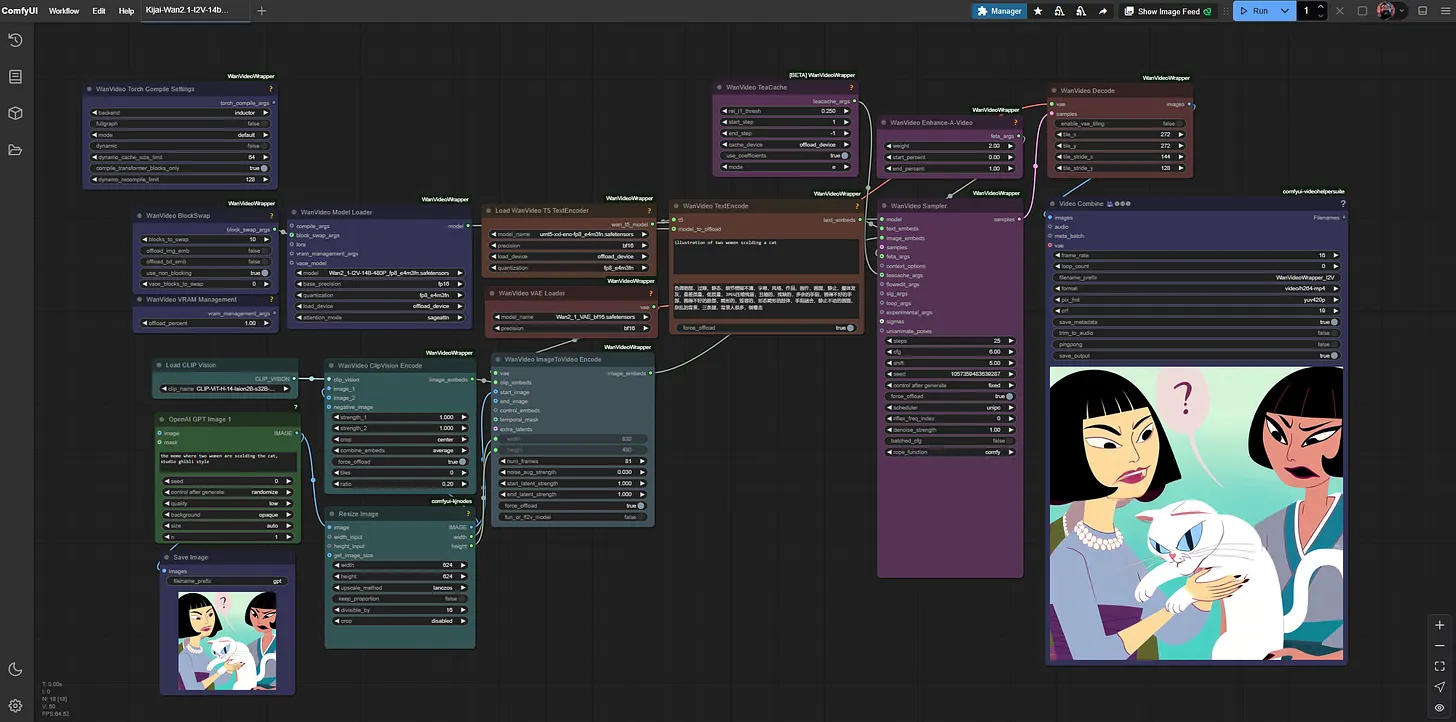

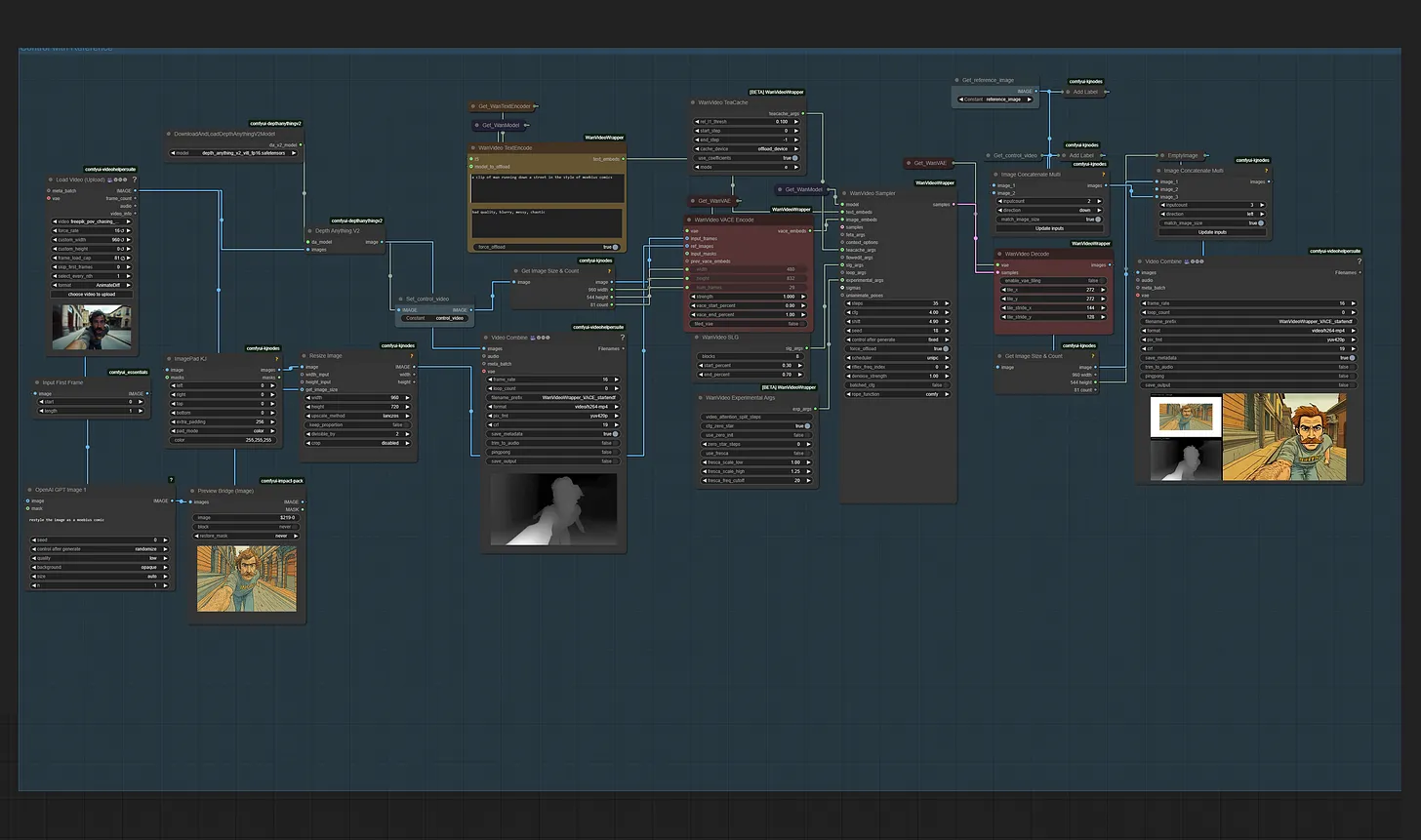

One of the most exciting use cases is combining powerful external models like GPT-Image-1 with your existing local models and workflows.

In this example, we used OpenAI’s image generation API to restyle an input image according to a text prompt, then passed the result through a local ComfyUI workflow: either Wan2.1 image-to-video (I2V) or Wan2.1 VACE control video generation.

GPT Image 1 + Wan2.1 I2V Workflow

GPT Image 1 + Wan2.1 VACE Control Workflow

Token Cost & Quality Options

Pricing matches OpenAI’s list price: $5 per 1M input text tokens, $10 per 1M input image tokens, and $40 per 1M output image tokens.
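To turn these per-million rates into a rough per-run estimate, multiply each token count by its rate and divide by one million. The Python sketch below does only that arithmetic. The function name `estimate_cost_usd` and the example token counts are hypothetical; real token counts for a run depend on the resolution, quality, and prompt you choose, so treat this as an illustration rather than a billing tool.

```python
# Hypothetical helper: estimates the USD cost of one GPT-Image-1 run
# from its token counts, using the list prices quoted above.

TEXT_INPUT_PER_M = 5.00     # USD per 1M input text tokens
IMAGE_INPUT_PER_M = 10.00   # USD per 1M input image tokens
IMAGE_OUTPUT_PER_M = 40.00  # USD per 1M output image tokens


def estimate_cost_usd(text_in_tokens: int, image_in_tokens: int, image_out_tokens: int) -> float:
    """Return the estimated cost in USD for the given token counts."""
    for n in (text_in_tokens, image_in_tokens, image_out_tokens):
        if n < 0:
            raise ValueError("token counts must be non-negative")
    return (
        text_in_tokens * TEXT_INPUT_PER_M
        + image_in_tokens * IMAGE_INPUT_PER_M
        + image_out_tokens * IMAGE_OUTPUT_PER_M
    ) / 1_000_000


if __name__ == "__main__":
    # Made-up counts: a 100-token prompt, no input image, 1,000 output image tokens.
    print(estimate_cost_usd(100, 0, 1000))  # 0.0405
```

Because output image tokens are billed at the highest rate, they usually dominate the total, which is why the chosen resolution and quality level matter most for cost.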

Token Cost by Resolution and Quality:

More Models Coming Soon

This is just the beginning. We’re actively expanding support for a broader range of external models — so stay tuned for upcoming integrations that will further unlock the potential of ComfyUI as a universal generative AI interface.

As always, we’d love to hear your thoughts and use cases — your feedback helps shape the future of ComfyUI!