Unlock Next-Level Video Generation with ComfyUI's LTX-Video 0.9.5 Integration

We're thrilled to announce that ComfyUI now fully supports LTX-Video 0.9.5! This major update to the lightning-fast LTXV series adds multiple key frame control, improved quality, and longer videos, and all of it works in ComfyUI today!


Highlights of LTX-Video 0.9.5

1. Commercial Licensing: LTXV 0.9.5 introduces the OpenRail-M license, allowing use of generated videos in commercial projects.

2. Key Frame Conditioning

Control start and end frames

Add multiple key frames

3. Improved Quality

Higher resolution videos

Support for longer sequences

Fewer visual artifacts

Better prompt understanding

4. Generation Speed: LTXV continues to deliver impressive generation speeds on local GPUs, balancing quality with performance.

Get Started in ComfyUI

Update ComfyUI to the latest version, or download the latest Desktop app.

Download the following 2 files:

1. ltx-video-2b-v0.9.5.safetensors → place in ComfyUI/models/checkpoints

2. t5xxl_fp16.safetensors or t5xxl_fp8_e4m3fn_scaled.safetensors → place in ComfyUI/models/text_encoders
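Before loading the workflows, a quick check can confirm that the files landed in the right folders. The sketch below is a hypothetical helper, not part of ComfyUI: it assumes a standard ComfyUI install whose root folder you pass in as `comfy_root`, and it only looks for the file names listed in the download steps.

```python
from pathlib import Path

# Expected locations relative to the ComfyUI root folder (taken from the download steps).
# Either T5 text-encoder variant satisfies the text_encoders requirement.
REQUIRED = {
    "models/checkpoints": ["ltx-video-2b-v0.9.5.safetensors"],
    "models/text_encoders": [
        "t5xxl_fp16.safetensors",
        "t5xxl_fp8_e4m3fn_scaled.safetensors",
    ],
}


def missing_models(comfy_root: str) -> list[str]:
    """Return a description of every folder that holds none of its expected files.

    Hypothetical helper for illustration; ComfyUI itself does not ship this.
    """
    root = Path(comfy_root)
    missing = []
    for folder, candidates in REQUIRED.items():
        # A folder is satisfied if at least one candidate file exists in it.
        if not any((root / folder / name).is_file() for name in candidates):
            missing.append(f"{folder}: one of {', '.join(candidates)}")
    return missing


if __name__ == "__main__":
    import sys

    # Assumed default: run from the folder that contains your ComfyUI directory.
    problems = missing_models(sys.argv[1] if len(sys.argv) > 1 else "ComfyUI")
    if problems:
        print("Missing:")
        for problem in problems:
            print("  " + problem)
    else:
        print("All LTX-Video 0.9.5 files are in place.")
```

Run it as `python check_ltxv.py /path/to/ComfyUI` (the script name is arbitrary). Checking for "any one of" the candidates mirrors the choice between the fp16 and fp8 text encoders.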

Use the following example workflows:

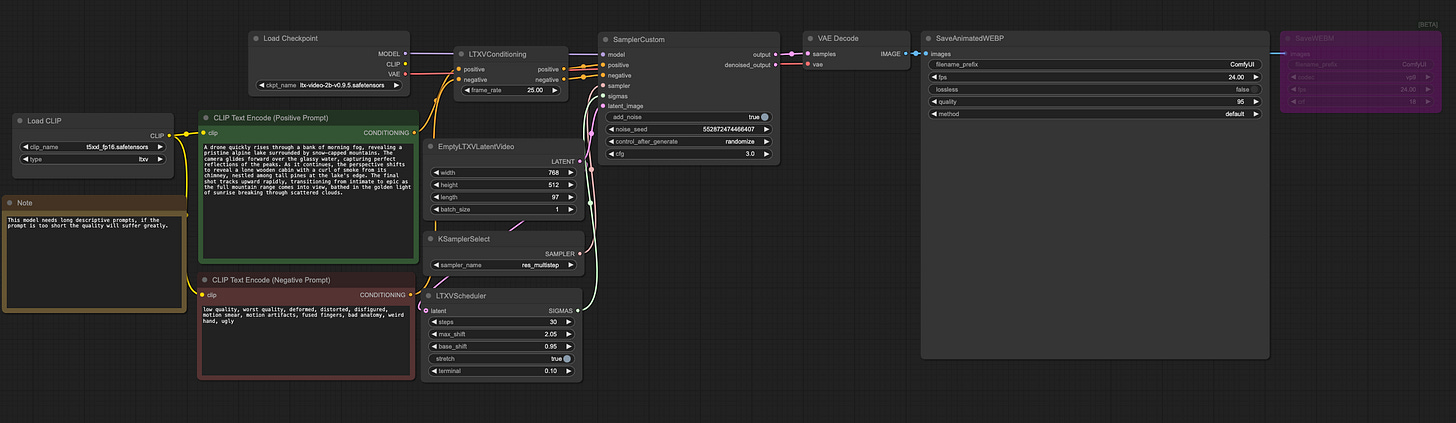

1. Text-to-video workflow

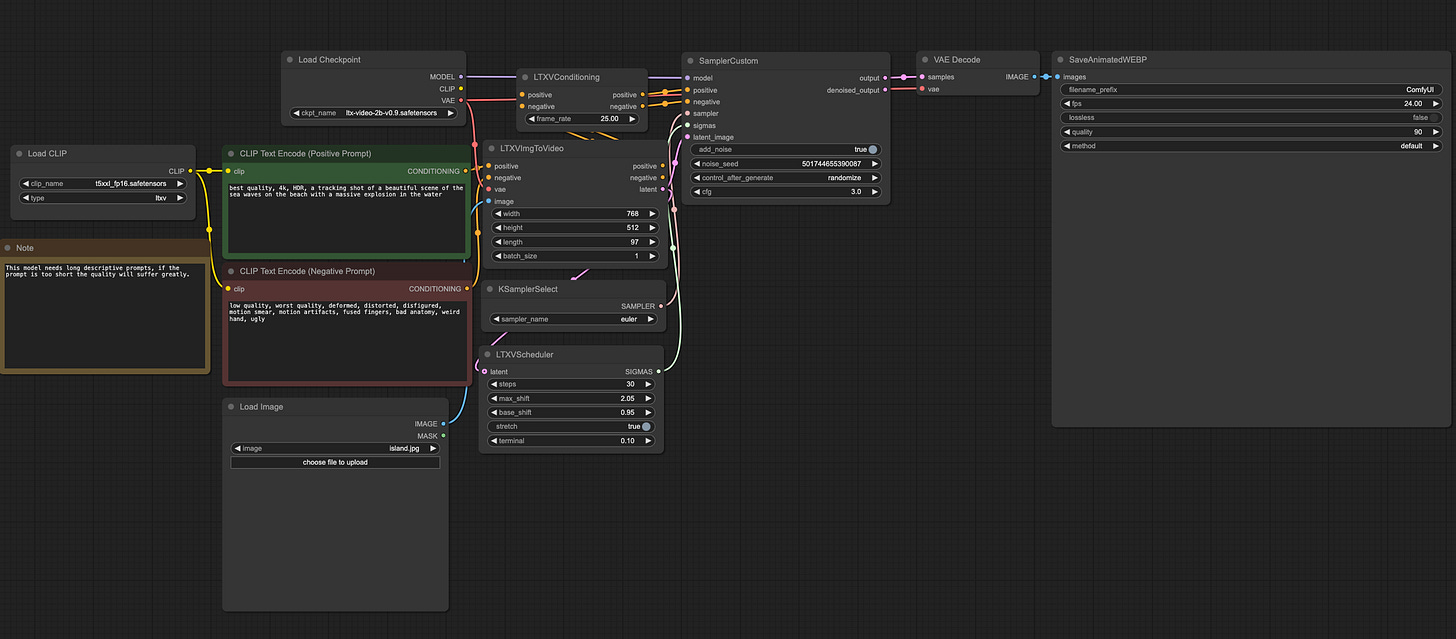

2. Simple image-to-video workflow

A simple image-to-video workflow with first-frame control:

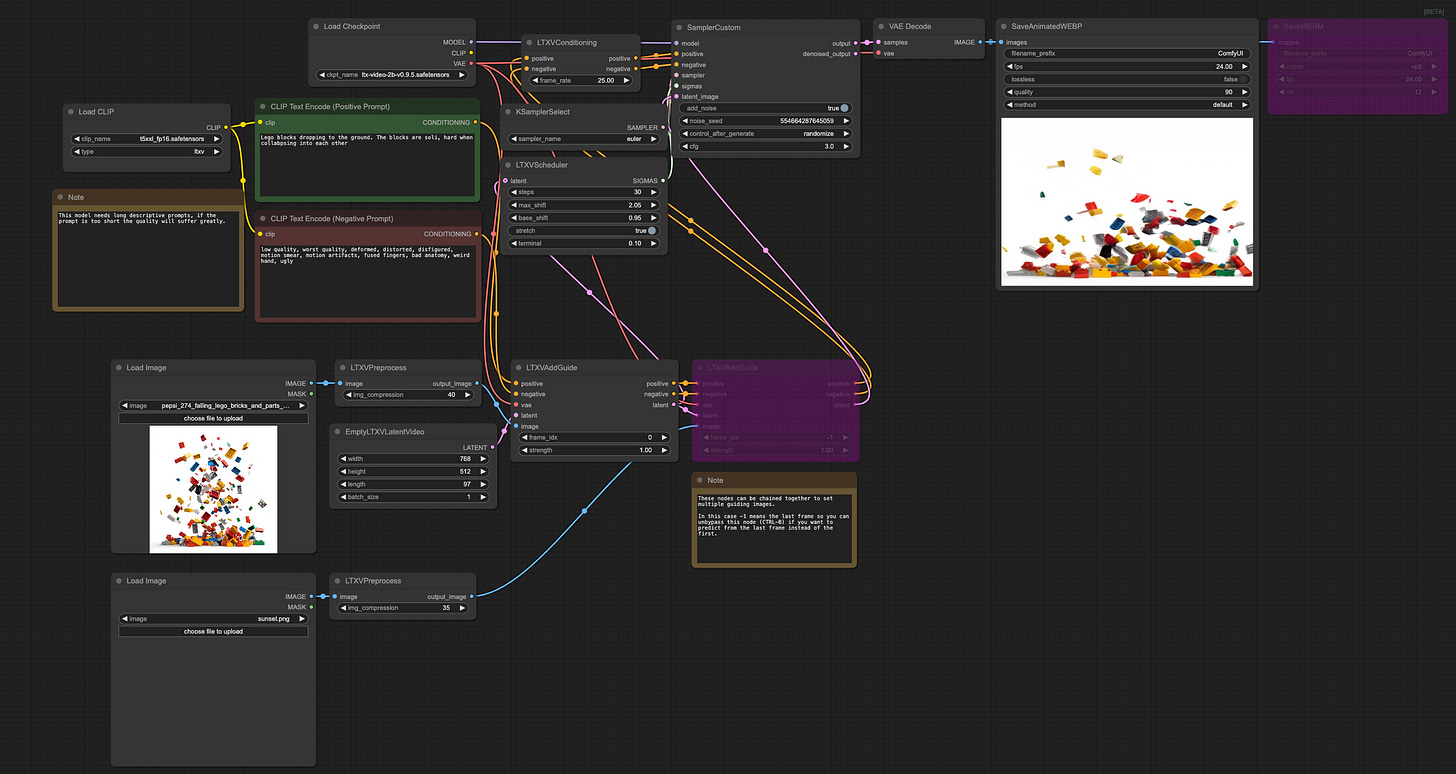

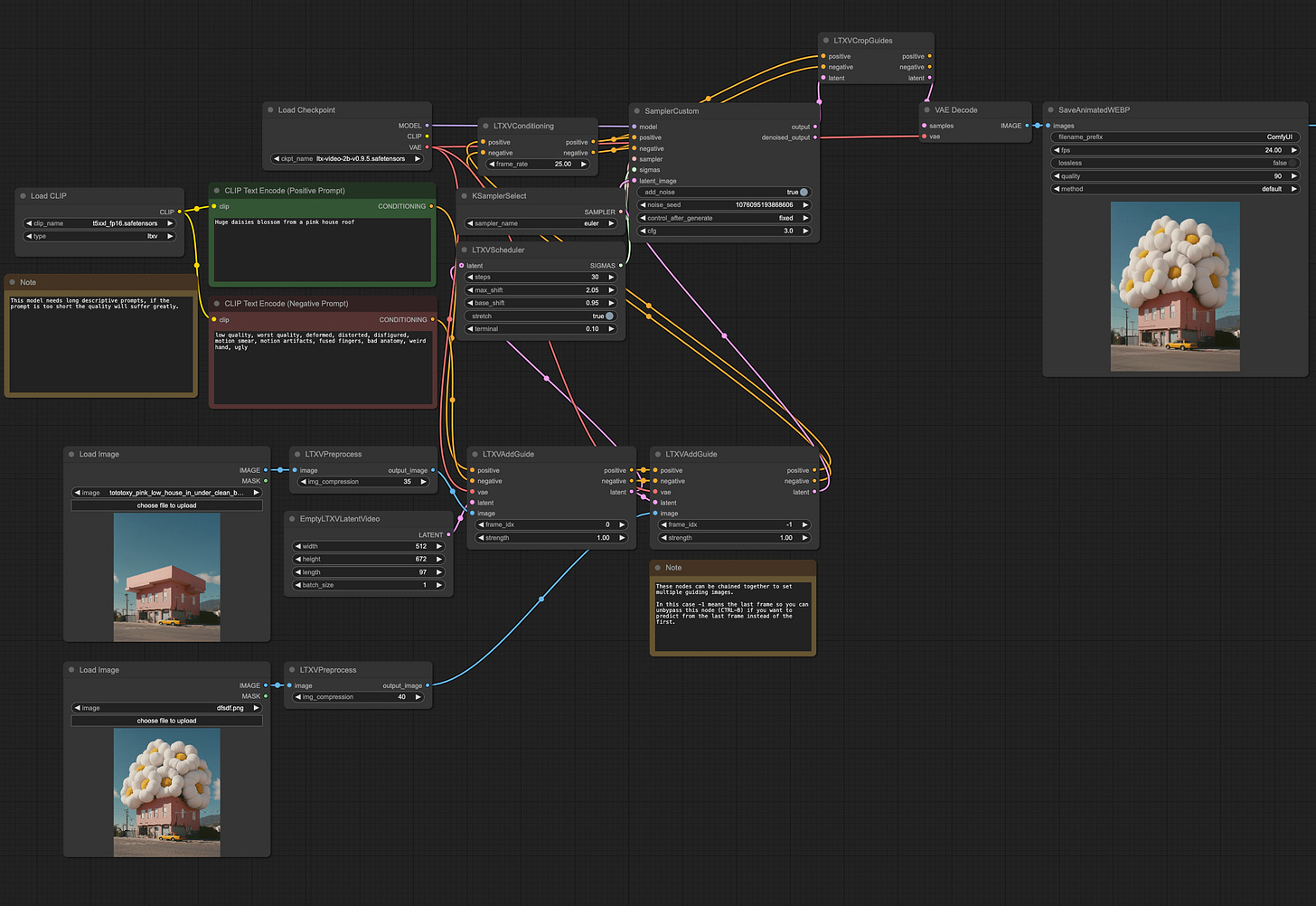

3. Multi-frame control workflow

Multi-frame image-to-video workflow: this workflow handles image-to-video generation guided by the first frame, the last frame, or multiple key frames.

To do typical image-to-video, keep only the first-frame control:

Use the same workflow and enable multi-frame control:

These “Load Image → LTXVPreprocess → LTXVAddGuide” node groups can be chained together to set multiple guide images.

Here, a frame index of -1 means the last frame, so you can un-bypass this node (Ctrl+B) if you want to generate from the last frame instead of the first.
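To reason about where each chained guide lands on the timeline, it can help to read the frame index the way Python reads negative list indices. The sketch below is illustrative only: `resolve_keyframes` and its `(frame_index, image_name)` input format are hypothetical, and it assumes -1 counts back from the end of the clip exactly like a Python index. It does not reproduce LTXVAddGuide's internals.

```python
def resolve_keyframes(guides: list[tuple[int, str]], num_frames: int) -> list[tuple[int, str]]:
    """Map each (frame_index, image_name) guide to an absolute frame position.

    Hypothetical helper: negative indices count from the end, as in Python,
    so -1 is the last frame of a clip with num_frames frames.
    """
    resolved = []
    for index, image in guides:
        position = index if index >= 0 else num_frames + index
        if not 0 <= position < num_frames:
            raise ValueError(f"frame index {index} is outside a {num_frames}-frame clip")
        resolved.append((position, image))
    # Sort by position so the guides read in timeline order.
    return sorted(resolved)


# Example: a first-frame guide chained with a last-frame guide on a 97-frame clip.
chain = [(0, "start.png"), (-1, "end.png")]
print(resolve_keyframes(chain, num_frames=97))  # [(0, 'start.png'), (96, 'end.png')]
```

Adding a third tuple such as `(48, "middle.png")` models one more chained guide group in the middle of the clip.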

We see big potential in the flexibility of multi-frame control for open models. We can’t wait to see more examples and tests from the community!

Enjoy creating!