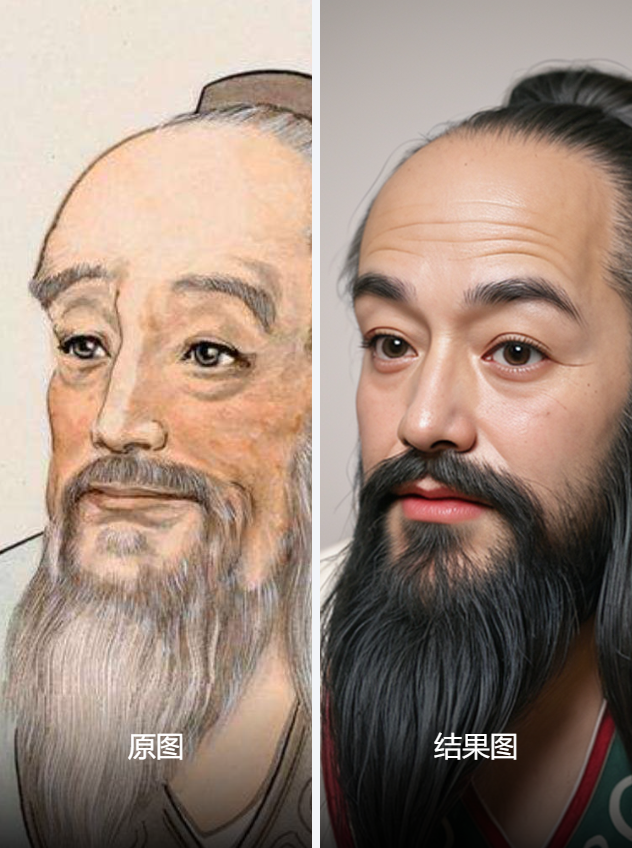

The Art of Revival: Using AI to Restore Historical Portraits from Paintings and Statues

📝 Workflow Overview

This workflow aims to restore historical figures from statues or paintings into realistic portraits using ControlNet and Stable Diffusion.

It uses line art, depth, and pose control to keep the generated image close to the input, while text prompts and the choice of style model refine the final result.

🧠 Core Models

1️⃣ Stable Diffusion (UNet)

Function: The primary image generation model that creates new images based on input controls and text descriptions.

Model Used:

LEOSAM's MoonFilm | 胶片风真实感大模型_2.0 (a film-style photorealistic checkpoint)

Installation:

Install via ComfyUI Manager.

Or manually download the .safetensors file and place it in models/checkpoints.

2️⃣ ControlNet (Control Networks)

Function: Controls the structure of the generated image to match the reference.

Models Used:

control_v11p_sd15_lineart (Line Art Control)

control_v11f1p_sd15_depth (Depth Control)

control_v11p_sd15_openpose (Pose Control)

Installation:

Requires the ControlNet plugin.

Place the .pth files in models/controlnet.

3️⃣ VAE (Variational Autoencoder)

Function: Decodes the latent output into the final image; a well-tuned VAE improves color richness and fine detail.

Model Used:

vae-ft-mse-840000-ema-pruned.safetensors

Installation:

Install via ComfyUI Manager.

Or manually download the VAE file (.safetensors or .vae.pt) and place it in models/vae.

4️⃣ CLIP (Text Encoder)

Function: Converts text prompts into vectors to guide image generation.

Installation:

Install via ComfyUI Manager.

Or manually download the .pt files and place them in models/clip.
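The folder layout described above can be verified with a short script before loading the workflow. This is a minimal sketch: the ComfyUI root path and the checkpoint file name are placeholders (the exact checkpoint file name is not given here), and the ControlNet entries assume the .pth extension mentioned above.

```python
from pathlib import Path

# Folder names follow the installation notes above.
# The checkpoint file name is a hypothetical placeholder.
EXPECTED_MODELS = {
    "models/checkpoints": ["moonfilm.safetensors"],  # hypothetical name
    "models/controlnet": [
        "control_v11p_sd15_lineart.pth",
        "control_v11f1p_sd15_depth.pth",
        "control_v11p_sd15_openpose.pth",
    ],
    "models/vae": ["vae-ft-mse-840000-ema-pruned.safetensors"],
}


def find_missing_models(comfy_root, expected=EXPECTED_MODELS):
    """Return the relative paths of expected model files that are absent."""
    root = Path(comfy_root)
    missing = []
    for folder, names in expected.items():
        for name in names:
            if not (root / folder / name).is_file():
                missing.append(f"{folder}/{name}")
    return missing


if __name__ == "__main__":
    # "ComfyUI" is an assumed install location; adjust it to your setup.
    for path in find_missing_models("ComfyUI"):
        print("missing:", path)
```

Replace the placeholder checkpoint name with the file you actually downloaded; an empty result means every listed file was found.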

📦 Key Components (Nodes)

| Node | Function |
|---|---|
| | Loads the Stable Diffusion model. |
| | Loads the VAE model. |
| ControlNetLoader | Loads the ControlNet models. |
| | Loads the reference image (ancient statue or painting). |
| AIO_Preprocessor | Extracts line art or depth information from the image. |
| | Processes text prompts to guide generation. |
| ControlNetApplyAdvanced | Applies ControlNet transformations to the generation process. |
| | Handles image sampling and generation. |
| | Converts the latent space back into a final image. |
| | Saves the generated image. |
| | Previews the generated output. |

📂 Major Workflow Groups

1️⃣ Line Art Control

Function: Ensures the outline of the generated image matches the input.

Key Components:

ControlNetLoader (control_v11p_sd15_lineart)

AIO_Preprocessor (AnyLineArtPreprocessor_aux)

ControlNetApplyAdvanced

2️⃣ Depth Control

Function: Uses depth information to maintain a realistic 3D structure.

Key Components:

ControlNetLoader (control_v11f1p_sd15_depth)

AIO_Preprocessor (DepthAnythingPreprocessor)

ControlNetApplyAdvanced

3️⃣ Pose Control

Function: Ensures the final pose matches the reference image for better restoration.

Key Components:

ControlNetLoader (control_v11p_sd15_openpose)

DWPreprocessor

ControlNetApplyAdvanced
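The three groups above share one pattern: load a ControlNet, preprocess the reference image, then apply the result to the conditioning. The sketch below expresses that pattern as a ComfyUI API-style graph fragment, assuming the groups are chained in series (the usual way to combine several ControlNets). The node ids, the strength value, and the input names are assumptions based on the standard API format; preprocessor widget values such as resolution are omitted, so compare against a workflow exported from your own ComfyUI before submitting anything.

```python
# Group definitions taken from the three groups above:
# name -> (ControlNet file, preprocessor node, extra preprocessor inputs)
GROUPS = {
    "lineart": ("control_v11p_sd15_lineart.pth", "AIO_Preprocessor",
                {"preprocessor": "AnyLineArtPreprocessor_aux"}),
    "depth": ("control_v11f1p_sd15_depth.pth", "AIO_Preprocessor",
              {"preprocessor": "DepthAnythingPreprocessor"}),
    "pose": ("control_v11p_sd15_openpose.pth", "DWPreprocessor", {}),
}


def build_control_chain(enabled, image_ref, positive_ref, negative_ref,
                        strength=1.0):
    """Chain the enabled ControlNet groups in series.

    Links use the [node_id, output_index] form. Returns the graph fragment
    plus the final positive/negative links to feed into the sampler.
    """
    graph = {}
    for name in enabled:
        model_file, preprocessor_node, extra_inputs = GROUPS[name]
        graph[f"{name}_loader"] = {
            "class_type": "ControlNetLoader",
            "inputs": {"control_net_name": model_file},
        }
        graph[f"{name}_preprocess"] = {
            "class_type": preprocessor_node,
            "inputs": {"image": image_ref, **extra_inputs},
        }
        graph[f"{name}_apply"] = {
            "class_type": "ControlNetApplyAdvanced",
            "inputs": {
                "positive": positive_ref,
                "negative": negative_ref,
                "control_net": [f"{name}_loader", 0],
                "image": [f"{name}_preprocess", 0],
                "strength": strength,  # assumed value, not from the workflow
                "start_percent": 0.0,
                "end_percent": 1.0,
            },
        }
        # The next group consumes the conditioning produced by this one.
        positive_ref = [f"{name}_apply", 0]
        negative_ref = [f"{name}_apply", 1]
    return graph, positive_ref, negative_ref
```

Passing a shorter list, for example `["lineart", "depth"]`, drops the pose group without touching the rest of the chain, which is one way to enable the groups selectively.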

🔢 Inputs & Outputs

📥 Main Inputs

Reference Image (statue or painting for restoration)

ControlNet Options (Line Art, Depth, Pose)

Sampling Parameters:

Seed Value (randomization control)

Sampling Method (DPM++ 2M, Euler, etc.)

Sampling Steps (default 30 steps)

Text Prompt (for style refinement)

📤 Main Outputs

Restored, high-quality portrait

Comparison between input and generated images

Style variations (e.g., film-like restoration)

⚠️ Important Considerations

Hardware Requirements

Requires a GPU with at least 8 GB of VRAM (12 GB or more recommended).

Each ControlNet consumes significant VRAM; disabling one or more of them may help.

Model Compatibility

Using film-style models (e.g., LEOSAM's MoonFilm) improves realism.

ControlNet modules (Line Art, Depth, Pose) can be selectively enabled.

Prompt Optimization

Example prompts:

"1 old man, long hair, black hair, beard, realistic, hanfu"

Add "photography level, cinematic lighting" for better realism.

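The prompt advice above amounts to joining subject tags with a few realism tags. A minimal sketch follows; the function name `build_prompt` is hypothetical, and the tags are the ones from the example.

```python
def build_prompt(subject_tags,
                 realism_tags=("photography level", "cinematic lighting")):
    """Join tags into one comma-separated prompt, skipping duplicates."""
    seen = set()
    ordered = []
    for tag in list(subject_tags) + list(realism_tags):
        tag = tag.strip()
        key = tag.lower()
        if tag and key not in seen:
            seen.add(key)
            ordered.append(tag)
    return ", ".join(ordered)


prompt = build_prompt(
    ["1 old man", "long hair", "black hair", "beard", "realistic", "hanfu"]
)
print(prompt)
# 1 old man, long hair, black hair, beard, realistic, hanfu, photography level, cinematic lighting
```

Keeping the subject tags and the realism tags separate makes it easy to reuse the same realism suffix across different reference images.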
Sampling Parameters

30–50 steps generally work best.

DPM++ 2M usually produces better quality than Euler.
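The sampling recommendations can be captured in a small settings helper. This is only a sketch: the function name is hypothetical, and `dpmpp_2m` and `euler` are assumed to be the sampler identifiers that correspond to DPM++ 2M and Euler in ComfyUI.

```python
import random

RECOMMENDED_STEPS = range(30, 51)  # the 30-50 step range suggested above


def sampler_settings(steps=30, sampler_name="dpmpp_2m", seed=None):
    """Collect sampling settings; a random seed is drawn when none is given."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    if seed is None:
        seed = random.randrange(2**32)
    return {
        "seed": seed,
        "steps": steps,
        "sampler_name": sampler_name,
        # True when the step count falls inside the suggested range.
        "in_recommended_range": steps in RECOMMENDED_STEPS,
    }
```

Fixing the seed makes a run repeatable, which helps when comparing samplers or step counts on the same reference image.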

Conclusion

This ComfyUI workflow revives ancient figures as realistic portraits by combining ControlNet structure control with prompt and style refinement.

It is ideal for historical character restoration, artistic reconstructions, and AI-generated historical portraits.
