Unlock the Power of Video-to-Animation: A Comprehensive Pipeline Guide

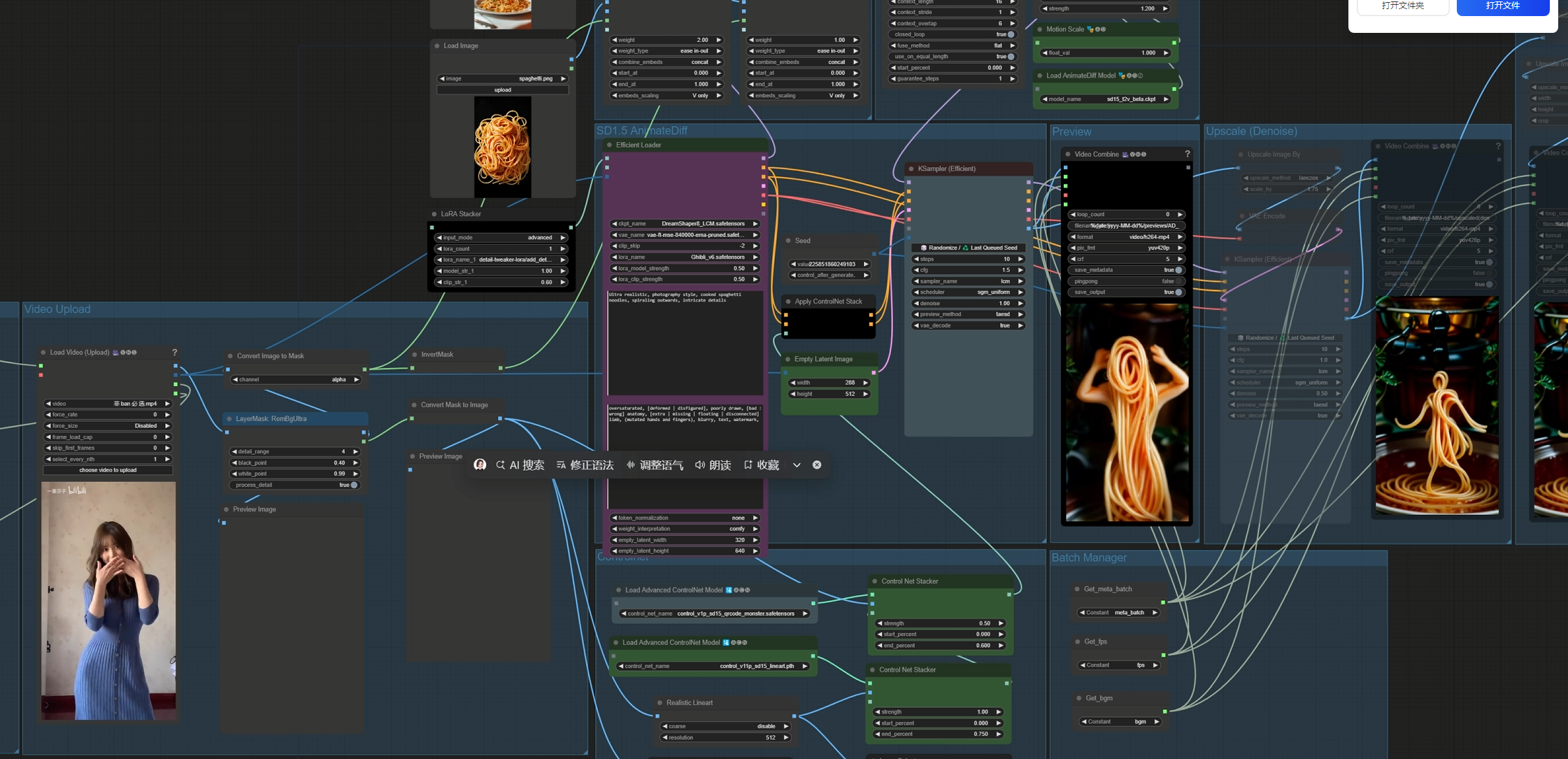

1. Workflow Overview

This is an advanced video-to-animation pipeline that:

Processes input video frames through AI-powered animation

Leverages AnimateDiff for motion generation

Uses ControlNet for structural guidance

Applies IPAdapter for style transfer

Outputs high-quality videos with upscaling and frame interpolation

Key Features:

Video frame extraction and background removal

Dual ControlNet guidance (line art + QR monster style)

Multi-stage upscaling (model-based + traditional)

Frame interpolation for smooth motion

2. Core Models

| Model | Purpose | Source | Required Files |
|---|---|---|---|
| DreamShaper8_LCM | Base image generation (LCM-optimized) | CivitAI | |
| AnimateDiff | Motion generation | GitHub | |
| ControlNet | Structure control | HuggingFace | |
| IPAdapter PLUS | Image-prompt conditioning | GitHub | |
| RIFE | Frame interpolation | GitHub | |

3. Key Nodes Breakdown

Essential Nodes

| Node | Function | Installation Source |
|---|---|---|
| VHS_LoadVideo | Video frame extraction | |
| RemBgUltra | Background removal | Manual install (GitHub) |
| ADE_AnimateDiffModel | Motion model loader | |
| IPAdapterAdvanced | Image prompt processing | |
| RIFE VFI | Frame interpolation | |

Critical Dependencies

AnimateDiff Requirements:

Motion modules (e.g., mm_sd_v15.ckpt)

Must match SD1.5 model architecture

IPAdapter Requirements:

CLIP Vision model (CLIP-ViT-H-14-laion2B-s32B-b79K)

Image encoder files

ControlNet Models:

Must be SD1.5-compatible versions

4. Workflow Structure

Processing Groups

| Group | Function | Inputs | Outputs |
|---|---|---|---|
| Video Input | Frame extraction | MP4 video | Individual frames |
| Mask Processing | Background removal | Raw frames | Transparency masks |
| IPAdapter | Style conditioning | Reference images | Style-embedded model |
| ControlNet | Structure guidance | Line art/masks | Controlled generation |
| AnimateDiff | Motion generation | Processed frames | Animated latent |
| Upscaling | Quality enhancement | Low-res frames | HD frames |
| Interpolation | Frame smoothing | Original frames | High-FPS video |

Data Flow

    [Video Input] → [Frame Extraction] → [Mask Generation]
            ↓
    [IPAdapter] → [AnimateDiff] → [ControlNet Processing]
            ↓
    [Initial Generation] → [Upscaling] → [Interpolation] → [Final Video]

5. Inputs & Outputs

Required Inputs

Source Video:

Format: MP4 (H.264 recommended)

Example:

非 ban 必 选.mp4

Reference Images:

For IPAdapter style transfer

Example:

spaghetti.png

Text Prompts:

Positive: "Ultra realistic, photography style..."

Negative: "oversaturated, [deformed | disfigured]..."

Key Parameters:

Initial resolution: 512×96

Final resolution: 1080p

Seed: 225851860249103 (or random)

Generated Outputs

Video Versions:

Preview (low-res)

Upscaled (model-based)

Interpolated (smooth motion)

Formats:

MP4 with H.264 encoding

Metadata preservation

6. Critical Notes

Hardware Requirements

Minimum: NVIDIA GPU with 12GB VRAM

Recommended: 16GB+ VRAM for full resolution
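The VRAM figures above can be checked before loading the workflow. The following is a minimal sketch, not part of the workflow itself: the function names and the 0.5 GiB tolerance are assumptions (cards sold as "12GB" typically report slightly less than 12 GiB), and the detection step assumes PyTorch is installed, which is normally the case in a ComfyUI environment.

```python
# Assumed tolerance: a card sold as "12GB" usually reports a little under 12 GiB.
TOLERANCE_GIB = 0.5


def vram_tier(total_gib: float) -> str:
    """Classify a GPU against the 12 GB minimum / 16 GB recommended figures."""
    if total_gib + TOLERANCE_GIB >= 16:
        return "recommended"
    if total_gib + TOLERANCE_GIB >= 12:
        return "minimum"
    return "below minimum"


def detect_vram_gib():
    """Return total VRAM of GPU 0 in GiB, or None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory / 1024**3


if __name__ == "__main__":
    vram = detect_vram_gib()
    if vram is None:
        print("No CUDA GPU detected (or PyTorch is not installed).")
    else:
        print(f"{vram:.1f} GiB VRAM: {vram_tier(vram)}")
```

Run it with the same Python interpreter that launches ComfyUI so that the same PyTorch build is queried.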

Common Issues & Fixes

VRAM Overflow:

Reduce batch_size in EmptyLatentImage

Launch ComfyUI with the --lowvram flag

Missing Models:

Ensure all .ckpt/.safetensors files are in the matching folder:

models/checkpoints (base models)

models/controlnet (ControlNet)

models/ipadapter (IPAdapter)

Plugin Conflicts:

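Update all dependencies via ComfyUI Manager, or manually by running the following in the ComfyUI folder (and in any custom node folder that ships a requirements.txt):

    git pull && python -m pip install -r requirements.txt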
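The folder layout listed under Missing Models can be verified with a short script. This is a rough sketch under stated assumptions: the helper name `find_missing` is hypothetical, the ComfyUI root is assumed to be a folder named `ComfyUI` in the current directory, and the check only confirms that each folder contains at least one weight file, not that the specific models this workflow needs are present.

```python
from pathlib import Path

# Folder names are the ones listed in the guide; the label text is illustrative.
MODEL_DIRS = {
    "base models": "models/checkpoints",
    "ControlNet": "models/controlnet",
    "IPAdapter": "models/ipadapter",
}
WEIGHT_SUFFIXES = {".ckpt", ".safetensors"}


def find_missing(comfy_root):
    """Return labels of model folders that are absent or hold no weight files."""
    missing = []
    for label, rel in MODEL_DIRS.items():
        folder = Path(comfy_root) / rel
        has_weights = folder.is_dir() and any(
            p.is_file() and p.suffix.lower() in WEIGHT_SUFFIXES
            for p in folder.iterdir()
        )
        if not has_weights:
            missing.append(label)
    return missing


if __name__ == "__main__":
    # "ComfyUI" is an assumed install location; adjust to your setup.
    for label in find_missing("ComfyUI"):
        print(f"No .ckpt/.safetensors files found for: {label}")
```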

Optimization Tips

For faster generation:

Use LCM-LoRA with reduced steps (10-15)

Disable unused ControlNets

For higher quality:

Enable Tiled VAE for upscaling

Use 2-pass interpolation
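To judge what 2-pass interpolation costs in frames, it helps to work out the arithmetic first. The sketch below assumes that "2-pass" means running the interpolator twice in sequence and that each pass inserts (multiplier - 1) new frames between every adjacent pair of frames; both function names are hypothetical, and the actual frame count produced by a given node may differ.

```python
def interpolated_frame_count(frames: int, multiplier: int, passes: int = 1) -> int:
    """Frame count after `passes` rounds of interpolation.

    Assumes each round inserts (multiplier - 1) frames between each adjacent pair.
    """
    if frames < 2:
        return frames  # nothing to interpolate between
    for _ in range(passes):
        frames = (frames - 1) * multiplier + 1
    return frames


def playback_fps(source_fps: float, multiplier: int, passes: int = 1) -> float:
    """Frame rate that keeps roughly the original clip duration."""
    return source_fps * multiplier ** passes


if __name__ == "__main__":
    # Hypothetical example: a 16-frame clip at 8 fps, two 2x passes.
    print(interpolated_frame_count(16, 2, passes=2))
    print(playback_fps(8, 2, passes=2))
```

Because every pass roughly multiplies the frame count, memory and encoding time grow with it, which is worth weighing against the VRAM limits noted earlier.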

7. Installation Guide

Step-by-Step Setup

Install core dependencies:

    cd ComfyUI/custom_nodes
    git clone https://github.com/Kosinkadink/ComfyUI-AnimateDiff-Evolved.git
    git clone https://github.com/Fannovel16/ComfyUI-Frame-Interpolation.git

Download required models:

AnimateDiff: Official GitHub

ControlNet: HuggingFace

Configure paths in extra_model_paths.yaml:

    ipadapter:
      base_path: models/ipadapter
    animatediff:
      motion_models: models/motion

This workflow demonstrates a complete video-to-animation pipeline in ComfyUI. When testing, enable the components one group at a time and monitor VRAM usage as each one is added.