Revamp Your Visuals: Inpainting, Style Transfer, and Auto-Captioning Made Easy

1. Workflow Overview

This is an SDXL-based inpainting and style transfer workflow designed for:

Local Inpainting: Fix defects or remove unwanted elements (e.g., watermarks).

Style Transfer: Apply reference image styles via IPAdapter and ControlNet.

Auto-Captioning: Generate prompts using Meta-Llama-3 for better text guidance.

2. Core Models

| Model Name | Function |
|---|---|
| DreamShaper XL v2.1 Turbo | Main checkpoint for high-quality image generation. |
| xinsir_controlnet_depth_sdxl | ControlNet model for depth-aware structure control. |
| IPAdapter (PLUS) | Style transfer by injecting reference image features. |
| MAT_Places512_G_fp16 | Inpainting-specific model for optimal repairs. |

3. Key Nodes

| Node Name | Function | Installation |
|---|---|---|
|  | Loads Fooocus inpainting models. | Via ComfyUI Manager |
|  | Advanced style transfer control. | Requires |
|  | Applies ControlNet depth conditioning. | Built-in (model download required). |
|  | Generates captions using Llama-3. | Install |
|  | Auto-calculates optimal inpainting resolution. | Built-in. |

Dependencies:

IPAdapter Models: Download `ip-adapter-plus_sdxl_vit-h.bin` to `models/ipadapter`.

ControlNet Models: Place `xinsir_controlnet_depth_sdxl_1.0.safetensors` in `models/controlnet`.
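The dependency list can be checked before loading the workflow. The following is a minimal sketch, not part of the workflow itself: it only looks for the two files named above, and the ComfyUI root folder passed in (`"ComfyUI"` in the example) is an assumption that should be changed to match your install.

```python
from pathlib import Path

# File names and subfolders are taken from the dependency list above.
# The ComfyUI root passed to find_missing_models is an assumption.
REQUIRED_MODELS = {
    "models/ipadapter": ["ip-adapter-plus_sdxl_vit-h.bin"],
    "models/controlnet": ["xinsir_controlnet_depth_sdxl_1.0.safetensors"],
}


def find_missing_models(comfy_root):
    """Return the relative paths of required model files that are absent."""
    root = Path(comfy_root)
    missing = []
    for folder, filenames in REQUIRED_MODELS.items():
        for name in filenames:
            if not (root / folder / name).is_file():
                missing.append(f"{folder}/{name}")
    return missing


if __name__ == "__main__":
    for path in find_missing_models("ComfyUI"):  # hypothetical install path
        print("missing:", path)
```

An empty result means both files are where the workflow expects them. The checkpoint and inpainting models from the Core Models table are not covered here because the text does not state their folders.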

4. Workflow Structure

Group 1: Auto-Captioning

Input: Original image (via `LoadImage`).

Output: Generated caption text.

Key Nodes: `Joy_caption_load`, `Joy_caption`.

Group 2: Preprocessing

Input: Image + mask.

Output: Cropped image and expanded mask.

Key Nodes: `CutForInpaint`, `GrowMask`.
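To make the preprocessing step concrete, here is a rough pure-Python sketch of the two ideas involved: expanding a binary mask by a few pixels, then cropping the image to the area the mask covers. It illustrates the concept only. The function names are hypothetical, and the actual `GrowMask` and `CutForInpaint` nodes may use a different kernel shape, padding, or crop rule.

```python
def grow_mask(mask, radius):
    """Dilate a binary mask (rows of 0/1) by `radius` pixels, square kernel."""
    height, width = len(mask), len(mask[0])
    grown = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                # Mark every pixel within `radius` of a masked pixel.
                for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                    for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                        grown[ny][nx] = 1
    return grown


def mask_bounding_box(mask):
    """Return (top, left, bottom, right), bottom/right exclusive, or None."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def crop(image, box):
    """Cut the rectangle described by `box` out of a row-major image."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]
```

Growing the mask before cropping gives the sampler a margin of surrounding pixels, which tends to hide the seam between repaired and untouched areas.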

Group 3: Inpainting & Style Transfer

Input: Preprocessed image + ControlNet depth + IPAdapter reference.

Output: Repaired image.

Key Nodes: `KSampler`, `IPAdapterAdvanced`, `INPAINT_ApplyFooocusInpaint`.

Group 4: Post-Processing

Input: Original and inpainted images.

Output: Blended result (

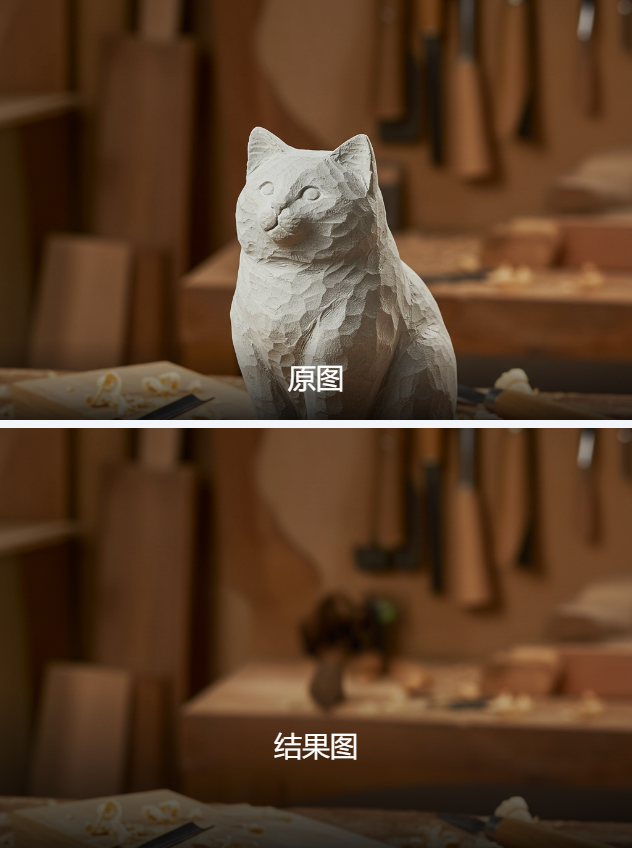

BlendInpaint) and comparison view (Image Comparer).
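The blending step can be pictured as a per-pixel mix controlled by the mask. The sketch below assumes single-channel images stored as nested lists of floats in the range 0 to 1, with a mask in the same range. The function name is hypothetical, and the real `BlendInpaint` node may additionally feather the mask edge before mixing.

```python
def blend_inpaint(original, inpainted, mask):
    """Mix two images: mask=1 takes the inpainted pixel, mask=0 keeps the original."""
    return [
        [m * p_new + (1.0 - m) * p_old
         for p_old, p_new, m in zip(row_old, row_new, row_mask)]
        for row_old, row_new, row_mask in zip(original, inpainted, mask)
    ]
```

Intermediate mask values produce a soft transition, which is why a slightly blurred mask usually blends more cleanly than a hard-edged one.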

5. Input & Output

Input Parameters:

Image: Must include a mask (marking areas to repair).

Resolution: Default `2048x2048` (auto-optimized by `PixelPerfectResolution`).

Prompts: Auto-generated by `Joy_caption` or manually entered.

Output: Final inpainted image (PNG) with comparison tool.
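As an illustration of how a working resolution can be derived automatically, the sketch below applies a heuristic commonly used for "pixel perfect" preprocessor resolution: scale the shorter side of the source image by the ratio between the target size and the source size. Whether `PixelPerfectResolution` in this workflow behaves exactly this way is an assumption. The function name and the `crop_to_fill` flag are hypothetical.

```python
def pixel_perfect_resolution(raw_width, raw_height, target_width, target_height,
                             crop_to_fill=False):
    """Estimate a preprocessor resolution for the given source and target sizes.

    crop_to_fill=False scales so the whole image fits inside the target;
    crop_to_fill=True scales so the image covers the target completely.
    """
    scale_h = target_height / raw_height
    scale_w = target_width / raw_width
    scale = max(scale_h, scale_w) if crop_to_fill else min(scale_h, scale_w)
    return round(scale * min(raw_width, raw_height))


if __name__ == "__main__":
    # A 1024x768 source with the default 2048x2048 target.
    print(pixel_perfect_resolution(1024, 768, 2048, 2048))
    print(pixel_perfect_resolution(1024, 768, 2048, 2048, crop_to_fill=True))
```

For a 1024x768 source and the default 2048x2048 target, the fit case scales by 2.0 and the fill case by roughly 2.67, so the two modes give noticeably different working sizes.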

6. Notes

VRAM: ≥12GB GPU recommended (SDXL + ControlNet + IPAdapter are resource-heavy).

Common Errors:

Missing models trigger red error boxes; verify the model paths.

Incorrect mask coverage leads to artifacts.

Optimization:

Enable `xformers` for faster generation.

Reduce `KSampler` steps (e.g., from 30 to 20) to save time.
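If the workflow is exported in ComfyUI's API format (a JSON object mapping node ids to entries with `class_type` and `inputs`), the step count can also be changed in a script rather than in the UI. This is a minimal sketch under that assumption. The helper name is hypothetical, and the small workflow dictionary is a stand-in, not the actual graph.

```python
import json


def set_ksampler_steps(workflow, steps):
    """Set `steps` on every KSampler node of an API-format workflow dict.

    Returns the number of nodes that were changed.
    """
    changed = 0
    for node in workflow.values():
        if isinstance(node, dict) and node.get("class_type") == "KSampler":
            node.setdefault("inputs", {})["steps"] = steps
            changed += 1
    return changed


if __name__ == "__main__":
    # Stand-in for an exported workflow; node ids and inputs are illustrative.
    workflow = {
        "3": {"class_type": "KSampler", "inputs": {"steps": 30, "cfg": 2.0}},
        "4": {"class_type": "LoadImage", "inputs": {"image": "input.png"}},
    }
    set_ksampler_steps(workflow, 20)
    print(json.dumps(workflow["3"]["inputs"]))
```

Sampling time grows roughly in proportion to the step count, so going from 30 to 20 steps removes about a third of the sampler iterations.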